AI and cloud traffic surged, driving inter-data-center bandwidth purchases up 330% from 2020 to 2024. By 2025, operators had moved past 400G, with 800G becoming mainstream and early pilots pushing into 1.6T links built on 224 Gb/s-class PAM4 lanes. Yet supply has lagged demand: in early 2024, primary North American data center markets showed only 2.8% vacancy. Switch ASICs now integrate HBM and extend fabrics up to 60 miles to feed AI clusters. At the core, everything still depends on the optical transceiver, which converts terabit-scale electrical signals into optical signals that travel over fiber with low loss and far lower energy per bit than copper.

Technology Foundations of High-Speed Optical Transceivers

Modern links carry 100-200 Gb/s on a single lane by raising the symbol rate and stacking new techniques. PAM4 encodes two bits per symbol, doubling throughput over NRZ at the same symbol rate, but it shrinks the eye, so low-noise drivers and DSP equalizers are key. Coherent methods modulate amplitude and phase on two polarizations so that DSP can undo dispersion after the fiber. Silicon photonics merges lasers, modulators, and detectors on CMOS wafers, cutting power and size and enabling dense co-packaged engines. Together, these techniques give optical transceivers a path to 800G, 1.6T, and even faster ports over standard fiber.
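To make the PAM4 trade-off concrete, here is a minimal Python sketch. It assumes a Gray-coded level map and an idealized eye with the same peak-to-peak swing as NRZ; the function names and the 53.125 GBd example symbol rate (the rate of a 100G-class PAM4 lane) are illustrative, not taken from any module's firmware.

```python
import math

# Gray-coded PAM4 map (an assumption): neighboring levels differ in one bit,
# so a slicer error to an adjacent level corrupts only one of the two bits.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def pam4_encode(bits):
    """Map an even-length bit list to PAM4 levels, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def line_rate_gbps(symbol_rate_gbd, bits_per_symbol):
    """Raw lane rate is symbol rate times bits carried per symbol."""
    return symbol_rate_gbd * bits_per_symbol


# Same peak-to-peak swing split into three stacked eyes: each PAM4 eye is
# one third the height of an NRZ eye, a penalty of 20*log10(3) dB.
eye_penalty_db = 20 * math.log10(3)

print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # [-3, -1, 1, 3]
print(line_rate_gbps(53.125, 1), line_rate_gbps(53.125, 2))  # 53.125 106.25
print(f"{eye_penalty_db:.2f} dB")  # 9.54 dB
```

Gray coding is the usual choice here because the most likely slicer error, landing on an adjacent level, then flips only one bit.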

800G Takes the Lead, 400G Remains Deployed

Deployment and Use Cases

Hyperscale cloud providers, including AWS, Azure, Google, and Meta, are the largest users of pluggable optics. Their massive data centers rely on metro and long-haul optical networks that demand steady bandwidth upgrades and backward-compatible hardware. With traffic growing more than 30% per year, these operators now deploy both 400G and 800G optical transceivers across leaf-spine and spine-core fabrics. Coherent ZR and ZR+ variants extend capacity over point-to-point DCI links between nearby sites, while DR4, FR4, and active optical cable (AOC) options support flexible rollouts inside the data center. Together, these deployments make 400G and 800G the default choices for new racks.
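The variant names above map roughly to link length. The sketch below picks the shortest-reach 400G-class option for a given distance. Its reach table uses nominal figures (SR8 about 100 m on OM4 multimode, DR4 500 m, FR4 2 km, LR4 10 km, 400ZR in the 80-120 km DCI class) and is an assumption for illustration, not a substitute for a module datasheet or a link-budget calculation; `pick_variant` is a hypothetical helper.

```python
# Nominal reaches in meters (assumed, for illustration only). Real reach
# depends on fiber type, link budget, and the specific module.
NOMINAL_REACH_M = [
    ("SR8", 100),      # multimode, OM4
    ("DR4", 500),      # parallel single-mode
    ("FR4", 2_000),    # duplex single-mode, 4 wavelengths
    ("LR4", 10_000),   # duplex single-mode, 4 wavelengths
    ("ZR", 120_000),   # coherent DCI, upper end of the 80-120 km class
]


def pick_variant(link_length_m):
    """Return the shortest-reach variant whose nominal reach covers the link."""
    for name, reach_m in NOMINAL_REACH_M:
        if link_length_m <= reach_m:
            return name
    return "ZR+"  # longer metro and regional spans


for length_m in (30, 500, 1_500, 80_000, 400_000):
    print(length_m, pick_variant(length_m))
```

Because the table is sorted by reach, the first match is the shortest-reach option that still closes the link.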

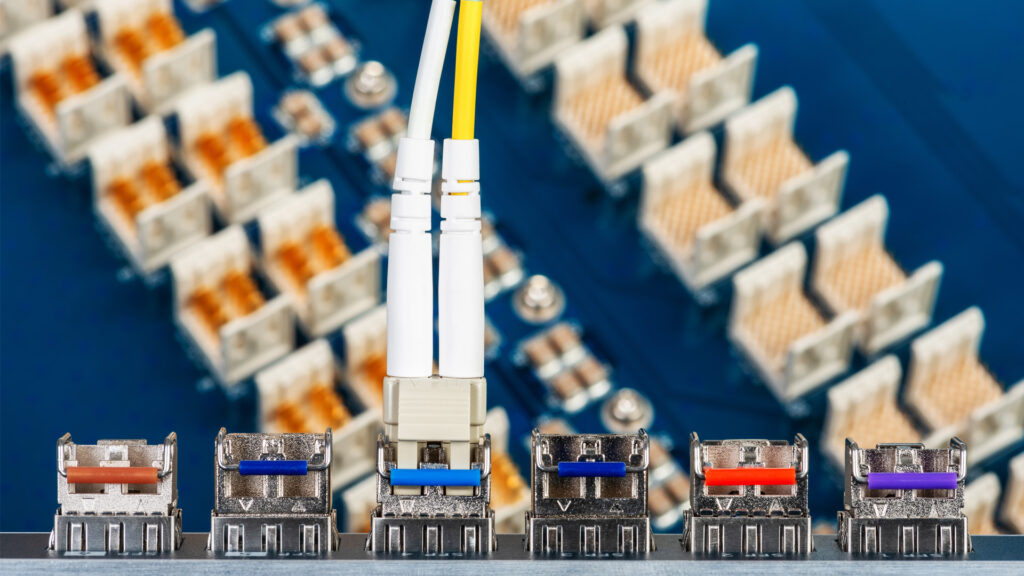

Form Factors: QSFP-DD and OSFP

QSFP-DD keeps the familiar QSFP shape and enables high port density on switches. Current 400G QSFP-DD modules typically draw 12 W or more, and DD800-generation designs target up to 25 W, which pressures cooling limits. OSFP is slightly larger and includes a built-in heat sink, allowing operation around 15 W without throttling. Both form factors support an 8 × 50G PAM4 electrical host interface, while DR4 and FR4 variants carry the same traffic on the optical side as 4 × 100G lanes. Common options also include LR4 and SR8 optics.
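The 8 × 50G electrical and 4 × 100G optical lane counts carry the same traffic because the module DSP acts as a gearbox between them. A minimal sketch, assuming the standard IEEE 802.3 overheads for these rates (256b/257b transcoding plus RS(544,514) FEC); `lane_rate_gbps` is an illustrative name:

```python
from fractions import Fraction

# Overheads assumed for 400GBASE-R / 800GBASE-R: 256b/257b transcoding
# followed by RS(544,514) forward error correction.
TRANSCODE = Fraction(257, 256)
RS_FEC = Fraction(544, 514)


def lane_rate_gbps(mac_rate_gbps, lanes):
    """Per-lane signaling rate after transcoding and FEC overhead."""
    return mac_rate_gbps * TRANSCODE * RS_FEC / lanes


for mac, lanes in [(400, 8), (400, 4), (800, 8)]:
    rate = lane_rate_gbps(mac, lanes)
    # PAM4 carries 2 bits per symbol, so the symbol rate is half the bit rate.
    print(f"{mac}G over {lanes} lanes: {float(rate)} Gb/s per lane, "
          f"{float(rate / 2)} GBd PAM4")
```

For 400G this gives 53.125 Gb/s on each of eight lanes or 106.25 Gb/s on each of four, both summing to 425 Gb/s on the wire, which is why a DR4 or FR4 module can sit behind an 8-lane host interface.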

Why the Industry is Eyeing 800G

AI clusters and edge fabrics can already saturate 400G uplinks. Copper channel loss rises with signaling frequency, so passive copper reach shrinks as lane speeds climb past 50G, and higher module wattage tightens airflow inside racks. Early 800G pluggables break out into two 400G channels to ease migration. The next-generation optical transceiver must hit 800G within power limits as 100G-lane switch silicon and the IEEE 802.3df specifications mature.
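A breakout simply splits a module's host lanes into independent groups. The Python sketch below assumes an 800G module with eight 100G-class lanes; the lane numbering and `portN` names are hypothetical, and real switches expose breakout through their own configuration tooling and CMIS application selection rather than anything like this function.

```python
def breakout(host_lanes, ports):
    """Split a module's host lanes evenly into independent breakout ports."""
    if host_lanes % ports:
        raise ValueError("lanes must divide evenly across breakout ports")
    per_port = host_lanes // ports
    return {
        f"port{p + 1}": list(range(p * per_port, (p + 1) * per_port))
        for p in range(ports)
    }


# An 800G module with eight 100G-class lanes, broken out as 2 x 400G.
mapping = breakout(8, 2)
print(mapping)  # {'port1': [0, 1, 2, 3], 'port2': [4, 5, 6, 7]}
# Aggregate rate per breakout port, assuming 100G per lane.
print({port: len(lanes) * 100 for port, lanes in mapping.items()})
```

Each 400G half keeps four lanes, so it can connect to a 400G peer running the same four-lane optical interface.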

800G Optical Transceivers: Emerging High-Density Solution

Key Enabling Technologies

Today’s 800G optical transceivers pair next-generation DSP chips with high-speed VCSELs for short-reach links. Leading DSPs are built on the latest process nodes and integrate multi-lane PAM4 processing and VCSEL drivers on-chip, which lowers power and improves signal integrity. The high-speed VCSELs deliver reliable short-reach optical links and support compact module designs.

Typical Applications

AI training clusters love fat pipes. A single 64 × 800G (51.2 Tb/s) switch can form a non-blocking fabric for many GPUs. Edge and core routers also pair two 800G ports into simple 1.6T uplinks toward the spine layer. Ethernet memory-pooling projects extend pooled DRAM over 800G links to feed inference servers.
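As a rough sizing check, assume a 51.2 Tb/s switch with 64 × 800G ports, one GPU NIC per host-facing port, and leaves with equal down- and uplink capacity (no oversubscription). One switch then serves 64 GPUs at 800G directly, and a two-tier leaf-spine of identical switches scales as sketched below; `two_tier_fabric` is a hypothetical helper, not a reference design.

```python
def two_tier_fabric(radix):
    """Size a non-blocking two-tier leaf-spine built from radix-k switches.

    Each leaf uses k/2 ports toward hosts and k/2 toward spines. Each spine
    port reaches a different leaf, so there can be k leaves and k/2 spines.
    Returns (host endpoints, leaves, spines).
    """
    if radix % 2:
        raise ValueError("radix must be even")
    leaves = radix
    spines = radix // 2
    endpoints = leaves * (radix // 2)
    return endpoints, leaves, spines


print(two_tier_fabric(64))   # (2048, 64, 32): 800G endpoints
print(two_tier_fabric(128))  # (8192, 128, 64): each port broken out 2 x 400G
```

Breaking each 800G port into two 400G ports doubles the effective radix, which quadruples the endpoint count at half the per-endpoint bandwidth.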

Deployment Challenges

Most 800G pluggables draw 16-18 W, which strains switch faceplate power budgets and airflow. Dense chassis see a larger temperature rise (delta-T) from inlet to outlet, so designers add heat sinks or liquid cooling to keep lasers within their operating temperature range. Interoperability remains tricky when mixing DSP-based and linear-pluggable optics (LPO), which is why operators lean on the CMIS management layer and MSA compliance tests.
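To see how 16-18 W modules add up, the sketch below totals optics power for a hypothetical fully populated 64-port faceplate and estimates the airflow needed to carry that heat away at a given delta-T. It assumes air at roughly sea-level density (1.2 kg/m³) with a specific heat of about 1005 J/(kg·K), and it ignores the switch ASIC, fans, and power-supply losses.

```python
# Assumed air properties near room temperature at sea level.
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
M3_PER_S_TO_CFM = 2118.88  # 1 m^3/s is about 2118.88 cubic feet per minute


def optics_power_w(ports, watts_per_module):
    """Total pluggable-optics power for a fully populated faceplate."""
    return ports * watts_per_module


def airflow_cfm(heat_w, delta_t_k):
    """Airflow that removes heat_w with a delta_t_k inlet-to-outlet air rise.

    Rearranges Q = rho * V * cp * dT to solve for the volumetric flow V.
    """
    flow_m3_per_s = heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)
    return flow_m3_per_s * M3_PER_S_TO_CFM


low, high = optics_power_w(64, 16), optics_power_w(64, 18)
print(low, high)  # 1024 1152
for dt in (10, 15, 20):
    print(f"delta-T {dt} K: {airflow_cfm(high, dt):.0f} CFM for the optics alone")
```

Halving the allowed delta-T doubles the required airflow, which is why dense chassis run out of fan headroom and turn to larger heat sinks or liquid cooling.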

Form Factors

QSFP-DD800 keeps the classic 18.35 mm QSFP width, so its cages still accept legacy QSFP modules, while a second row of electrical contacts doubles the lane count. OSFP 800G is about 22.6 mm wide and sheds heat through a taller finned top, which gives it a bit more thermal headroom. Both shells house the optical transceiver electronics behind standard fiber connectors, so modules of either shell with matching optical interfaces can link to each other as needs grow.

1.6T Optical Transceivers: Future-Proofing Network Infrastructure

CPO and Silicon Photonics Integration

Co-packaged optics (CPO) places silicon photonics dies physically close to the switch application-specific integrated circuit (ASIC). Shortening the electrical path between chip and optics eases the electrical bottleneck between them. Silicon photonics integration brings modulators, lasers, and DSP onto one platform. Together, they enable high-density, low-latency links in 1.6T optical transceiver modules.

Standardization Progress

IEEE 802.3df defines 400 Gb/s and 800 Gb/s Ethernet over 100 Gb/s lanes. The in-progress IEEE 802.3dj draft adds 200 Gb/s electrical and optical lanes, eight of which aggregate into a 1.6 Tb/s Ethernet port, along with longer reaches and new signal classes. OIF’s CEI-224G project locks down jitter masks and test procedures so 224G SerDes from different vendors interoperate. Test equipment exercises full-rate ports against these drafts to help vendors hit a moving target.

Application Scenarios

AI training clusters with tens of thousands of GPUs crave 100 Tb/s fabrics, which is why cloud builders are sampling 1.6T modules. CPO switch ports can cut network power in such “AI factories.” Hyperscalers also eye 1.6T pluggables for metro DCI. Edge sites could fold four 400G uplinks into one optical transceiver to ease fiber management for edge-to-core traffic.

Technical Barriers

At 224 Gb/s, equalization, jitter control, and low-loss laminates dominate the signal-integrity budget. CPO packages crowd ASICs, photonic dies, and lasers together, creating tight thermal envelopes and making fiber alignment difficult. Multi-die yield penalties keep module costs at a premium, and each advanced package still feels like a custom project. Extra FEC blocks and retimers burn power, so designers trade reach gains against the energy budgets targeted in IEEE study work.
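Multi-die yield penalties compound: if a package works only when every die and bond works, its yield is roughly the product of the individual yields. The sketch below assumes independent failures and no known-good-die screening or rework, and the 95% ASIC and 98% photonic-engine yields are hypothetical placeholders, not industry data.

```python
import math


def package_yield(die_yields):
    """Yield of a package that works only if every die works.

    Assumes independent failures, no known-good-die screening, no rework.
    """
    return math.prod(die_yields)


ASIC_YIELD = 0.95    # hypothetical
ENGINE_YIELD = 0.98  # hypothetical, per photonic engine
ENGINES = 8          # hypothetical engine count per package

y = package_yield([ASIC_YIELD] + [ENGINE_YIELD] * ENGINES)
print(f"package yield: {y:.3f}")
# Cost of one good package relative to the cost of building one package.
print(f"relative cost per good package: {1 / y:.2f}x")
```

Even with high per-die yields, roughly one package in five fails under these assumptions, raising the effective cost of each good unit by about a quarter.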

3.2T Optical Transceivers: The Next Frontier

Emerging Technologies beyond 1.6 Tb/s

Researchers are investigating ways to move past the 1.6 Tb/s optical transceiver limit. One path pushes electro-optic bandwidth beyond 100 GHz using new modulators, including thin-film lithium niobate or quantum-confined Stark effect electro-absorption modulators (EAMs), alongside silicon photonics. Another route shifts to coherent modulation formats such as offset-QAM-16 or DP-16QAM, which can carry around 400 Gb/s per lane, so 3.2 Tb/s needs fewer lanes.
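A quick check on the lane arithmetic: DP-16QAM carries 4 bits per symbol on each of two polarizations, so about 50 GBd per lane supplies 400 Gb/s before FEC and framing overhead, and 3.2 Tb/s needs eight such lanes instead of sixteen 200G lanes. A minimal sketch with hypothetical helper names; real coherent links add FEC overhead, so their actual symbol rates run higher.

```python
import math


def bits_per_symbol(constellation_points, polarizations):
    """Bits per symbol for a square QAM format on one or two polarizations."""
    return int(math.log2(constellation_points)) * polarizations


def symbol_rate_gbd(lane_gbps, bits):
    """Symbol rate needed per lane, ignoring FEC and framing overhead."""
    return lane_gbps / bits


def lanes_needed(total_gbps, lane_gbps):
    """Whole lanes required to carry total_gbps."""
    return math.ceil(total_gbps / lane_gbps)


dp16qam = bits_per_symbol(16, 2)
print(dp16qam)                                           # 8
print(symbol_rate_gbd(400, dp16qam))                     # 50.0
print(lanes_needed(3200, 200), lanes_needed(3200, 400))  # 16 8
```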

Silicon Photonics and Co-Packaged Optics

The push to put optics beside the switch ASIC is formalized in the OIF 3.2 Tb/s co-packaged module specification, which spells out thermal, optical, and electrical requirements for a palm-sized module. Some platforms already show 1.6T and 3.2T silicon-photonics engines soldered onto substrates in place of pluggable optical transceivers. Integrators say CPO reduces faceplate I/O and watts per bit compared with OSFP-XD pluggables.
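Watts per bit is easiest to compare in picojoules per bit. The sketch below converts module power and rate to pJ/bit, then back to total optics power for a 51.2 Tb/s switch. The 16 W, 800G pluggable sits at the bottom of the 16-18 W range quoted for 800G modules, while the 5 pJ/bit CPO figure is a hypothetical placeholder, not a measured or specified value.

```python
def pj_per_bit(power_w, rate_gbps):
    """Energy per bit in pJ: 1 W per Gb/s is 1 nJ/bit, i.e. 1000 pJ/bit."""
    return power_w / rate_gbps * 1000.0


def optics_power_w(throughput_tbps, energy_pj_per_bit):
    """Total optics power: pJ/bit x Tb/s = (1e-12 J)(1e12 bit/s) = W."""
    return throughput_tbps * energy_pj_per_bit


pluggable = pj_per_bit(16.0, 800.0)  # 800G module at 16 W
cpo = 5.0                            # hypothetical CPO figure, pJ/bit
for name, energy in (("pluggable", pluggable), ("CPO (hypothetical)", cpo)):
    print(f"{name}: {energy:.1f} pJ/bit -> "
          f"{optics_power_w(51.2, energy):.0f} W at 51.2 Tb/s")
```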

Where 3.2T Fits

Next-generation optical transceiver designs address the huge data demands of AI clusters and hyperscale cloud fabrics. Some AI accelerators may already need two 800G ports per GPU, adding pressure for 1.6T or 3.2T interconnects. Global hyperscale data centers and future 5G/6G backhaul networks demand higher-density, low-latency links, which makes such transceivers a key enabler.
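To see why per-GPU bandwidth drives module speed, the sketch below counts GPU-facing modules for a hypothetical 1,024-GPU cluster at 1.6 Tb/s per GPU (two 800G ports each). It covers only the host-facing side of one tier; switch-side optics and additional fabric tiers multiply the total. `modules_needed` is a hypothetical helper.

```python
import math


def modules_needed(gpus, gbps_per_gpu, module_gbps):
    """Host-facing modules needed to give every GPU its bandwidth."""
    return math.ceil(gpus * gbps_per_gpu / module_gbps)


GPUS = 1024           # hypothetical cluster size
PER_GPU = 2 * 800     # two 800G ports per GPU
for module in (800, 1600, 3200):
    print(f"{module}G modules: {modules_needed(GPUS, PER_GPU, module)}")
```

Each doubling of module speed halves the module count for the same bandwidth, which is the density argument behind 1.6T and 3.2T.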

Empowering Next-Generation Networks with FICG Optical Transceivers

Use-Case Snap-Match

Campus backbones stop at 400/800G SR-/FR-class links, metros eye 800G-1.6T coherent pluggables, long-haul shifts to 800G ZR+ and early 1.6T ZR, and hyperscale leaf-spine fabrics are rolling out 800G today and budgeting rack power and thermal headroom for 1.6T and 3.2T.

Visit FICG Optical Transceivers to explore our full portfolio of 400G, 800G, 1.6T, and 3.2T solutions. As a leading electronics manufacturing service provider, FICG specializes in optical transceiver production, leveraging advanced SMT, PCBA, COB, CPO, and silicon photonics (SiPh) technologies. With proven expertise from early SFP modules to today’s 800G and 1.6T platforms, we deliver reliable, energy-efficient products for AI, cloud, hyperscale, and next-generation network infrastructures.